What can we do to help people spot AI-developed content?

Just a few years ago, one could spot problematic content online with a few simple tests: Do the images or video look fake? Is the audio mechanical or robotic? Is the text full of typos or misspelled words? Content that failed those tests was usually considered suspect, and a viewer might discount its validity.

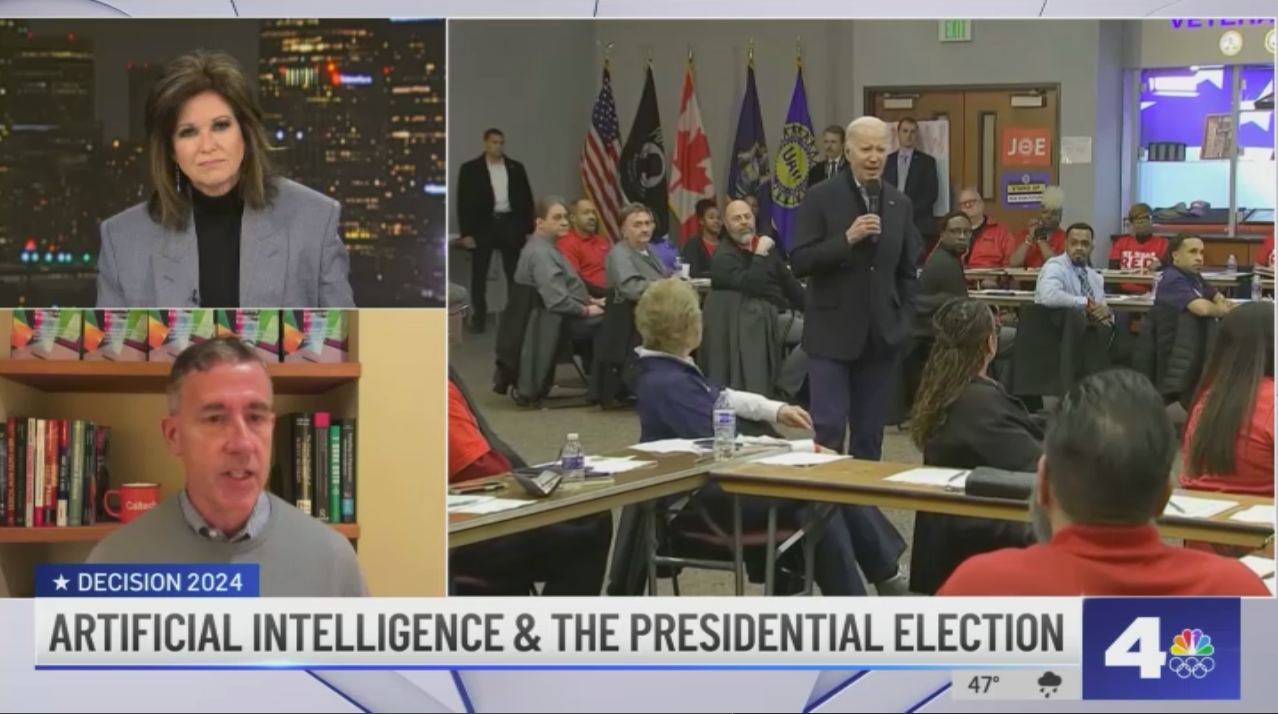

But generative AI and Large Language Models (LLMs) have leveled the content-generation playing field. Producing very realistic content to post online can often be a matter of using some simple off-the-shelf applications and thirty minutes of time, as we demonstrated in a recent white paper. This is creating a lot of concern about highly realistic misinformation as we head into the 2024 US Presidential election cycle, as I discussed in the recent NBC4 interview shown above.

As I discussed briefly in the interview, social media and other online platforms should do more to help information consumers recognize problematic online content, in particular content that has been produced by generative AI and LLMs. I thought it was good news to hear that Meta is now asking its industry to label content generated by AI. How quickly the industry might take on that task, how user-friendly the labeling will be, and what exactly will be labeled are still unclear. Let’s hope that social media and other online platforms act quickly to label AI-made material, before problematic and misleading material about the 2024 election proliferates.